Harsh Muriki

I am a BS/MS student in Robotics at Georgia Tech, advised by Dr. Sonia Chernova, where I focus on Task and Motion Planning (TAMP) using VLMs. I am also a Graduate Teaching Assistant for CS 3630. Previously, I worked as a GRA with Dr. Yongsheng Chen on deep learning and computer vision in AgTech.

✉️ vmuriki3@gatech.edu

Research Interests: Task and Motion Planning (TAMP), Long-Horizon Mobile Manipulation, Large Vision-Language Models for Robotics, Embodied AI, Learning-Based Robotic Planning, Agricultural Robotics

Publications

Research Projects

Semantic Proactive Assistance for Households

3D spatio-temporal mapping with the Hello Robot Stretch to understand and track dynamic indoor environments.

View Project

Deep-Learning-Driven Lettuce Analysis

Collected the largest hyperspectral lettuce dataset and trained vision-transformer models to predict nutrient content and growth.

View Project

Robotic Phenotyping for Small Scale Urban Farms

3D point-cloud processing and pose-estimation pipelines for accurate plant monitoring and robotic pollination.

View Project

Course Projects

IRL Project: HINTeract - Hint-Driven Hierarchical Learning for Robotic Furniture Assembly

Built a modular hierarchical reinforcement learning (HRL) framework in which a Sawyer arm requests on-demand human hints to grasp, align, and insert parts, achieving sample-efficient toy-table assembly in Robosuite from 260 expert demos. The code is open source.

View Project

MRS Project: CableCrop RL - Multi-Cable Robot Planning for Agricultural Data Capture

Designed a coordinated pair of cable robots, one RGB and one hyperspectral, and trained PPO/MAPPO agents in simulation to maximize crop coverage and data richness while avoiding collisions, for scalable precision-agriculture monitoring.

View Project

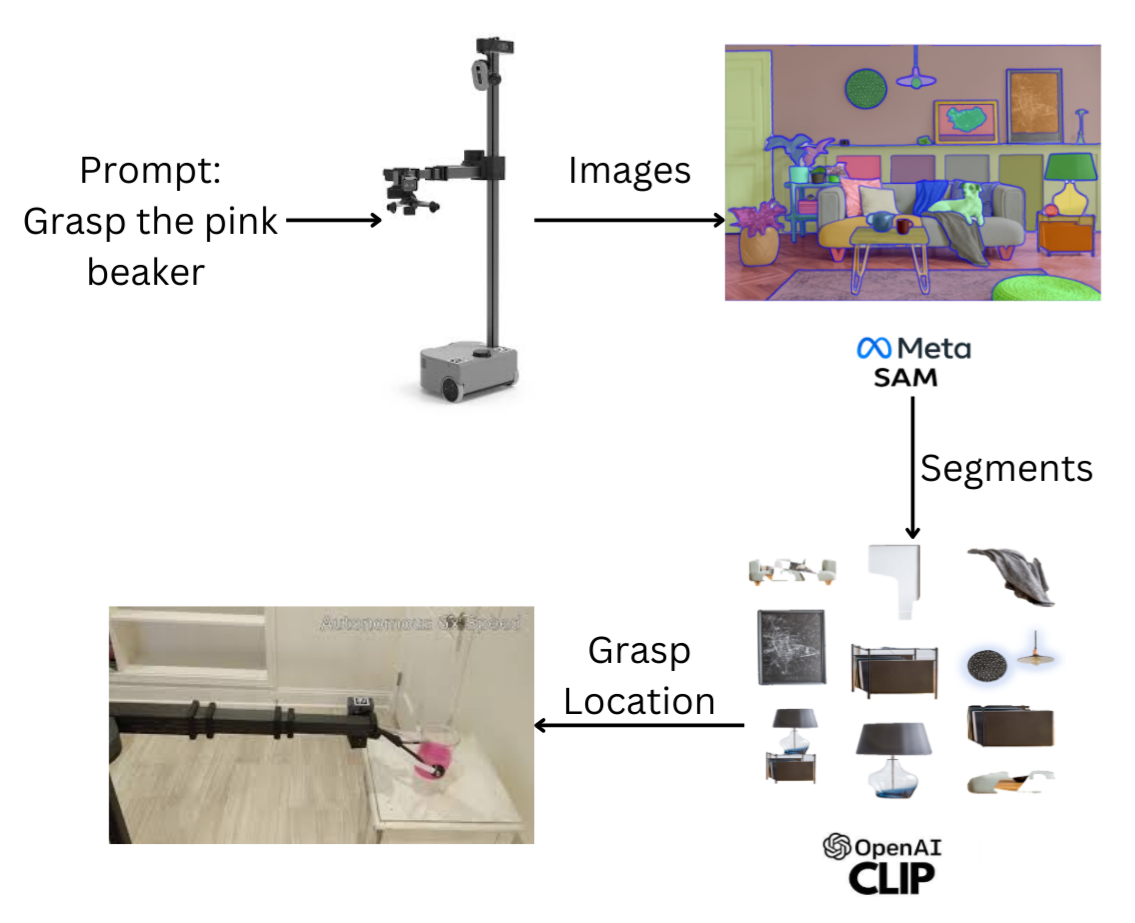

HRI Project: LM-NavGrasp - Language-Guided Navigation & Grasping on Hello Robot Stretch

Developed a pipeline that fuses vision and vision-language foundation models (CLIP + SAM) with ROS-Nav so that Stretch can parse a natural-language object description, autonomously navigate to the correct table, and grasp the specified item in cluttered lab scenes.

View Project